Revisiting FullHD X11 desktop performance of the Allwinner A10

In my previous blog post, I described pathologically bad Linux desktop performance with FullHD monitors on Allwinner A10 hardware.

A lot of time has passed since then. Thanks to the availability of Rockchip sources and documentation, we have learned a lot about the DRAM controller in Allwinner A10/A13/A20 SoCs. Both Allwinner and Rockchip apparently license the DRAM controller IP from the same third-party vendor, and their DRAM controller hardware registers share many similarities (though unfortunately they are not an exact match).

Having much better knowledge of the hardware allowed us to revisit this problem, investigate it in more detail and come up with a solution back in April 2014. The only missing part was an update in this blog, at least to make it clear that the problem has now been resolved. So here we go...

The most likely culprit (DRAM bank conflicts)

A lot of general information about how DRAM works is readily available on the Internet [1] [2], not to mention the official JEDEC DDR3 specification and the datasheets from the DDR3 chip vendors.

DDR3 memory is typically organized as 8 interleaved banks. Only one page can be open in each bank at a time. If two competing DRAM users (such as the CPU and the display controller) try to access different pages in the same bank, there is a significant performance penalty: the previous page needs to be closed first (this takes tRP cycles), then the next page needs to be opened (this takes tRCD cycles), and finally there is an additional delay of tCAS cycles before back-to-back bursts can be served from the newly opened page at the tCCD rate.
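To get a feeling for the size of this penalty, here is a minimal Python sketch that counts the cycles spent on a single burst. The function name is made up for illustration. The tRP, tRCD and tCCD values are the Cubieboard defaults quoted later in this post, while the CAS latency of 6 is only an assumed placeholder.

```python
def burst_cycles(page_hit, t_rp, t_rcd, t_cas, t_ccd):
    """Cycles spent on one burst.

    A page hit only costs tCCD. A bank conflict additionally pays for
    closing the old page (tRP), opening the new one (tRCD) and the CAS
    latency (tCAS) before the data can flow.
    """
    if page_hit:
        return t_ccd
    return t_rp + t_rcd + t_cas + t_ccd


# tRP=6, tRCD=6, tCCD=4 are the Cubieboard values; t_cas=6 is an assumption.
hit = burst_cycles(True, t_rp=6, t_rcd=6, t_cas=6, t_ccd=4)
miss = burst_cycles(False, t_rp=6, t_rcd=6, t_cas=6, t_ccd=4)
print(f"page hit: {hit} cycles, bank conflict: {miss} cycles ({miss / hit:.1f}x slower)")
# prints: page hit: 4 cycles, bank conflict: 22 cycles (5.5x slower)
```

With these numbers, a burst that runs into a bank conflict occupies the memory for several times longer than a burst served from an already open page.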

Now we only need to know how bad the bank conflicts are in practice and whether they can explain the performance problems observed earlier on the Cubieboard and Mele A2000 devices when driving a FullHD monitor.

What we know about the hardware

The Cubieboard default DRAM timing values for tRP (6), tRCD (6) and tCCD (4) can be easily identified by decoding the SDR_TPR0 hardware register bitfields. The page size is 4096 bytes on the Cubieboard (two 2048-byte pages from two 16-bit DRAM chips combined), which means that any physical addresses that differ by a multiple of 32K bytes belong to the same bank. The 32-bit data bus transfers 32 bytes of data per 8-beat burst.
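The arithmetic behind these numbers can be sketched in a few lines of Python. The address mapping used here (consecutive pages rotating through the banks) is an assumption that is consistent with the 32K stride mentioned above; the real controller mapping is not documented. The helper names are made up for illustration.

```python
PAGE_SIZE = 4096       # bytes per combined page (two 2048-byte pages)
NUM_BANKS = 8          # typical DDR3 organization
BUS_WIDTH_BITS = 32    # Cubieboard data bus width
BURST_BEATS = 8        # DDR3 burst length

# Bytes moved by one burst: 4 bytes per beat * 8 beats = 32 bytes
BURST_BYTES = BUS_WIDTH_BITS // 8 * BURST_BEATS


def bank_of(addr):
    """Bank index under the assumed page-interleaved mapping."""
    return (addr // PAGE_SIZE) % NUM_BANKS


def row_of(addr):
    """Row (page within a bank) under the same assumed mapping."""
    return addr // (PAGE_SIZE * NUM_BANKS)


def is_bank_conflict(addr_a, addr_b):
    """True if both addresses hit the same bank but different pages."""
    return bank_of(addr_a) == bank_of(addr_b) and row_of(addr_a) != row_of(addr_b)


base = 0x40000000  # arbitrary example address
print(BURST_BYTES)
print(is_bank_conflict(base, base + 32 * 1024))  # same bank, different page
print(is_bank_conflict(base, base + 4096))       # neighbouring bank
```

Two addresses 32K apart land in the same bank on different pages, which is exactly the situation that triggers the penalty.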

Additionally, we can have a look at some of the listed Synopsys DesignWare® DDR2/3-Lite SDRAM Memory Controller IP features:

* Includes a configurable multi-port arbiter with up to 32 host ports using Host Memory Interface (HMI), AMBA AHB or AMBA 3 AXI
* Command re-ordering and scheduling to maximize memory bus utilization
* Command reordering between banks based on bank status
* Programmable priority arbitration and anti-starvation mechanisms
* Configurable per-command priority with up to eight priority levels; also serves as a per-port priority
* Automatic scheduling of activate and precharge commands

We can compare these features with the 'arch/arm/mach-sun7i/include/mach/dram.h' header file from the linux-sunxi kernel (this is the only information available to us about the DRAM controller host ports):

```c
typedef struct __DRAM_HOST_CFG_REG{
    unsigned int AcsEn:1;       //bit0, host port access enable
    unsigned int reserved0:1;   //bit1
    unsigned int PrioLevel:2;   //bit2, host port poriority level
    unsigned int WaitState:4;   //bit4, host port wait state
    unsigned int CmdNum:8;      //bit8, host port command number
    unsigned int reserved1:14;  //bit16
    unsigned int WrCntEn:1;     //bit30, host port write counter enable
    unsigned int RdCntEn:1;     //bit31, host port read counter enable
} __dram_host_cfg_reg_t;

typedef enum __DRAM_HOST_PORT{
    DRAM_HOST_CPU = 16,
    DRAM_HOST_GPU = 17,
    DRAM_HOST_BE = 18,
    DRAM_HOST_FE = 19,
    DRAM_HOST_CSI = 20,
    DRAM_HOST_TSDM = 21,
    DRAM_HOST_VE = 22,
    DRAM_HOST_USB1 = 24,
    DRAM_HOST_NDMA = 25,
    DRAM_HOST_ATH = 26,
    DRAM_HOST_IEP = 27,
    DRAM_HOST_SDHC = 28,
    DRAM_HOST_DDMA = 29,
    DRAM_HOST_GPS = 30,
} __dram_host_port_e;
```

The actual DRAM controller host port settings are presented as tables in the u-boot bootloader. The priority of DRAM_HOST_BE (Display Engine Backend) is set higher than the priority of DRAM_HOST_CPU (CPU).
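For poking at these settings from a script, the bitfields can be unpacked with plain shifts and masks. The following is a minimal Python sketch that follows the bit positions given in the header comments (bit 0 being the least significant bit). The function name is made up, and the example register value is invented for illustration; it is not taken from u-boot.

```python
def decode_host_cfg(value):
    """Split a 32-bit host port configuration word into its fields,
    using the bit positions from the __DRAM_HOST_CFG_REG comments."""
    return {
        "AcsEn": value & 0x1,              # bit 0
        "PrioLevel": (value >> 2) & 0x3,   # bits 2..3
        "WaitState": (value >> 4) & 0xF,   # bits 4..7
        "CmdNum": (value >> 8) & 0xFF,     # bits 8..15
        "WrCntEn": (value >> 30) & 0x1,    # bit 30
        "RdCntEn": (value >> 31) & 0x1,    # bit 31
    }


# Hypothetical register value, only to exercise the decoder
example = 0x0000020D
for name, field in decode_host_cfg(example).items():
    print(f"{name:10s} = {field}")
```

The two-bit PrioLevel field is the one that matters for the rest of this post, because it decides which host port wins when several of them compete for the memory.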

All of this information is sufficient to implement a simple software simulation.

Software simulation

The following quickly hacked Ruby script tries to model the competition between the display controller and the CPU, taking into account the DRAM bank conflict penalties whenever they happen. In this model, we assume that the display controller has the highest priority and attempts to do standalone 32-byte burst reads at regular intervals whenever possible. All the leftover memory bandwidth is consumed by the CPU. Below are the simulation results produced by this script:

| Video mode / memory clock speed | 360MHz | 384MHz | 408MHz | 432MHz | 456MHz | 480MHz |
|---|---|---|---|---|---|---|
| 1920x1080, 32bpp, 60Hz | 499 MB/s | 500 MB/s | 500 MB/s | 500 MB/s | 500 MB/s | 500 MB/s |
| 1920x1080, 32bpp, 56Hz | 466 MB/s | 466 MB/s | 466 MB/s | 466 MB/s | 466 MB/s | 1950 MB/s |
| 1920x1080, 32bpp, 50Hz | 416 MB/s | 416 MB/s | 416 MB/s | 1858 MB/s | 1244 MB/s | 2071 MB/s |
| 1920x1080, 24bpp, 60Hz | 375 MB/s | 1502 MB/s | 809 MB/s | 1864 MB/s | 1864 MB/s | 1865 MB/s |
| 1920x1080, 24bpp, 56Hz | 1463 MB/s | 1129 MB/s | 1687 MB/s | 1740 MB/s | 1740 MB/s | 1177 MB/s |
| 1920x1080, 24bpp, 50Hz | 1553 MB/s | 1553 MB/s | 1553 MB/s | 1337 MB/s | 1919 MB/s | 2383 MB/s |

| Video mode / memory clock speed | 360MHz | 384MHz | 408MHz | 432MHz | 456MHz | 480MHz |
|---|---|---|---|---|---|---|
| 1920x1080, 32bpp, 60Hz | 1496 MB/s | 1496 MB/s | 1595 MB/s | 1920 MB/s | 2206 MB/s | 2492 MB/s |
| 1920x1080, 32bpp, 56Hz | 1397 MB/s | 1578 MB/s | 1843 MB/s | 2129 MB/s | 2422 MB/s | 3235 MB/s |
| 1920x1080, 32bpp, 50Hz | 1600 MB/s | 1887 MB/s | 2193 MB/s | 2888 MB/s | 2889 MB/s | 2889 MB/s |
| 1920x1080, 24bpp, 60Hz | 1869 MB/s | 2599 MB/s | 2600 MB/s | 2600 MB/s | 2601 MB/s | 2601 MB/s |
| 1920x1080, 24bpp, 56Hz | 2426 MB/s | 2426 MB/s | 2427 MB/s | 2427 MB/s | 2428 MB/s | 2428 MB/s |
| 1920x1080, 24bpp, 50Hz | 2167 MB/s | 2167 MB/s | 2167 MB/s | 2168 MB/s | 2168 MB/s | 2364 MB/s |

The results from the two tables above can be directly compared with the actual experimental data from the previous blog post. The simulation model is very limited and does not take into account all the possible factors limiting the memory bandwidth, so the green cells in the tables with simulated results tend to be overly optimistic. But we are only interested in the crossover point, where the performance becomes pathologically bad (the red table cells). And the simulation seems to represent the memset performance drop reasonably accurately.
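To make the idea of the model more concrete, here is a much simplified Python sketch of the same kind of simulation. It is not the Ruby script that produced the tables and is not expected to reproduce their numbers. The CAS latency of 6, the base addresses, the page-interleaved bank mapping and all the names are assumptions made for illustration. The display controller gets absolute priority and issues one 32-byte burst per interval, the CPU walks memory linearly and takes every leftover slot, and every page switch is charged with the penalty cycles.

```python
def simulate(mem_clock_hz, scanout_bytes_per_s, total_cycles=100_000,
             page_size=4096, banks=8, burst_bytes=32,
             t_rp=6, t_rcd=6, t_cas=6, t_ccd=4,
             display_base=0x1000000, cpu_base=0x2000000):
    """Return the simulated CPU bandwidth in bytes per second."""
    open_row = [None] * banks  # currently open page in each bank

    def access(addr):
        # Cycles needed for one burst at addr; updates the bank state.
        page = addr // page_size
        bank, row = page % banks, page // banks
        cost = t_ccd
        if open_row[bank] != row:
            # Close the old page (if any), open the new one, wait for CAS.
            cost += (t_rp if open_row[bank] is not None else 0) + t_rcd + t_cas
            open_row[bank] = row
        return cost

    # Cycles between two display bursts (infinite if the display is off).
    if scanout_bytes_per_s > 0:
        interval = burst_bytes * mem_clock_hz / scanout_bytes_per_s
    else:
        interval = float("inf")

    now, next_display = 0, interval
    display_addr, cpu_addr, cpu_bytes = display_base, cpu_base, 0
    while now < total_cycles:
        if now >= next_display:
            # The display controller always wins when its request is due.
            now += access(display_addr)
            display_addr += burst_bytes
            next_display += interval
        else:
            # Otherwise the CPU (memset-like linear walk) uses the slot.
            now += access(cpu_addr)
            cpu_addr += burst_bytes
            cpu_bytes += burst_bytes
    return cpu_bytes * mem_clock_hz / now


clock = 480_000_000
for name, rate in [("display off", 0),
                   ("1920x1080, 32bpp, 60Hz", 1920 * 1080 * 4 * 60)]:
    print(f"{name}: {simulate(clock, rate) / 1e6:.0f} MB/s left for the CPU")
```

Changing `mem_clock_hz`, the scanout rate or the timing parameters makes it easy to explore where the CPU stops being able to slip between the display bursts, although the exact crossover points depend on details that this sketch does not model.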

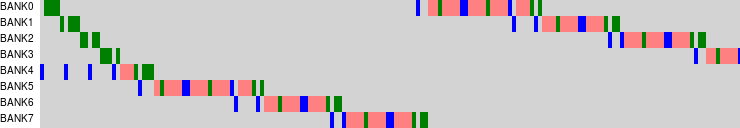

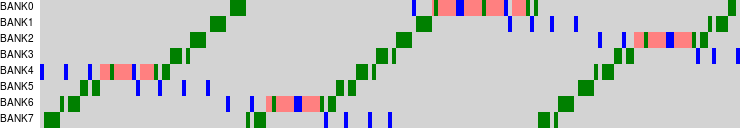

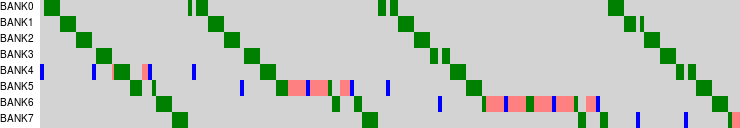

Timeline charts

As an additional bonus, below are the timeline charts of the simulated memory accesses from the display controller (shown in blue), accesses from the CPU (shown in green) and the cycles wasted on the bank switch penalties (shown in red). When generating these charts, the page size was artificially reduced to 128 bytes for illustrative purposes. The behaviour with 4096 bytes per page does not differ much, but needs much larger timeline chart pictures, which would not fit well here.

Chart 1 demonstrates one of the configurations with a pathological memset performance drop. The CPU memory accesses from memset quickly catch up with the framebuffer scanout and start fighting for the same bank. The gaps between the memory accesses from the display controller are too small: they don't leave enough time to switch to a different page in the bank, perform more than one CPU burst and switch back to serve the next request from the display controller in time. As a result, the CPU and the display controller can't escape each other and keep progressing at roughly the same slow speed (~500 MB/s). Most of the memory bandwidth is wasted on the pointless page switch overhead.
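The ~500 MB/s figure is easy to cross-check against the bandwidth that the framebuffer scanout itself needs. Here is a small Python sketch, assuming that the bandwidth is averaged over the whole frame (blanking intervals are ignored) and that 1 MB means 10^6 bytes. The function name is made up for illustration.

```python
def scanout_bandwidth(width, height, bytes_per_pixel, refresh_hz):
    """Average framebuffer scanout bandwidth in bytes per second."""
    return width * height * bytes_per_pixel * refresh_hz


for bytes_per_pixel in (4, 3):          # 32bpp and 24bpp
    for refresh_hz in (60, 56, 50):
        rate = scanout_bandwidth(1920, 1080, bytes_per_pixel, refresh_hz)
        print(f"1920x1080, {bytes_per_pixel * 8}bpp, {refresh_hz}Hz: "
              f"{rate / 1e6:.1f} MB/s")
```

For 32bpp at 60Hz this gives 497,664,000 bytes per second, which is close to the simulated memset speed in the pathological cases, as expected when the CPU is locked to the pace of the display controller.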

Another important thing is that in reality the display refresh also gets somewhat disrupted. On Allwinner A10 hardware it manifests itself as occasional screen shaking up/down and does not look very nice.

Chart 2 demonstrates backwards memset behaviour. As the CPU is walking memory in the opposite direction, bank conflicts with the display controller do not last long and, after escaping, the CPU is able to cycle through all the banks until it clashes with the display controller again. The overall performance is much better than in chart 1.

Chart 3 demonstrates a much less demanding display refresh bandwidth (color depth and refresh rate are both reduced) and a higher DRAM clock speed. Unlike in chart 1, the memory accesses from the CPU (green) are now able to slip between the memory accesses from the display controller (blue). The performance is good.

Analysis of the results and a solution for the problem

In fact, we have no idea what is really happening in the DRAM controller, because we have neither proper tools nor good documentation. But the selected software simulation model appears to provide somewhat reasonable results, which are consistent with the previously collected experimental data. So until proven otherwise, we just assume that this model is correct.

It looks like the root cause of the severe memory performance drop and screen shaking glitches on the HDMI monitor is that the display controller in Allwinner A10 is not particularly well behaved. Having higher priority for it means that the DRAM controller must serve the requests from the display controller as soon as they arrive without trying to postpone and/or coalesce them into larger batches in order to minimize bank conflict penalties. Reducing the priority of the display controller host ports and making it the same as the CPU/GPU actually improves the situation a lot, because the DRAM controller is now free to schedule memory accesses. At least the memset performance gets much better.

But what about the screen shaking HDMI glitches? If there is some kind of buffering in the display controller, then we do not really care about high priority and minimizing the latency of memory accesses, but only need to ensure that the average framebuffer scanout bandwidth is sufficient. Does such buffering exist? Unfortunately, this does not seem to be the case for DEBE (Display Engine Backend), and reducing the DRAM_HOST_BE priority makes the HDMI glitches much worse (for example, even moving the mouse cursor is enough to cause a disruption). However, the DEFE (Display Engine Frontend), which is responsible for scaling the hardware layers, seems to implement some sort of buffering. So a reduction of the DRAM_HOST_FE priority does not cause trouble with the HDMI signal for scaled layers, while resolving the memory performance problems!

To sum it up, we have a solution, but it is not perfect. Scaled DEFE layers now work great, but there are only two of them, and ordinary non-scaled DEBE layers still remain broken.

Benchmarking on real hardware

The A10-OLinuXino-LIME development board has only a 16-bit DRAM bus, so driving a FullHD monitor is even more challenging for it than for the other Allwinner A10 devices. This makes it interesting hardware to test. This time the testing involves the glmark2-es2 3D graphics benchmark, which is run with the proprietary Mali400 drivers:

| Allwinner A10 based device / monitor resolution and refresh rate (32bpp X11 desktop) | 1280x720p50 | 1280x720p60 | 1920x1080p50 | 1920x1080p60 |
|---|---|---|---|---|
| Mele A2000, 32-bit DDR3 @360MHz (before the fix) | 151 | 148 | 140 | 136 |
| Cubieboard, 32-bit DDR3 @480MHz (before the fix) | 166 | 166 | 161 | 157 |
| A10-OLinuXino-LIME, 16-bit DDR3 @480MHz (before the fix) | 100 | 91 | 56 | 48 |
| A10-OLinuXino-LIME, 16-bit DDR3 @480MHz (after the fix) | 114 | 110 | 94 | 85 |

The last row in the table shows the performance improvement after switching to the use of DEFE layers (scaler mode) and applying the DRAM host ports priority tweak to u-boot. This tweak has already made it to the mainline u-boot.
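To put the 16-bit bus of the LIME into perspective, here is a rough Python sketch comparing the theoretical peak DRAM bandwidth of the three boards with the FullHD scanout bandwidth. The peak figure assumes two transfers per clock cycle (DDR) and ignores refresh, page switches and any other overhead, so the real headroom is smaller. The function name is made up for illustration.

```python
def peak_dram_bandwidth(bus_width_bits, mem_clock_hz):
    """Theoretical peak in bytes per second: DDR moves data on both
    clock edges, so there are two transfers per clock cycle."""
    return bus_width_bits // 8 * 2 * mem_clock_hz


# Average 1920x1080, 32bpp, 60Hz scanout bandwidth (blanking ignored)
scanout = 1920 * 1080 * 4 * 60

boards = [
    ("Mele A2000, 32-bit DDR3 @360MHz", 32, 360_000_000),
    ("Cubieboard, 32-bit DDR3 @480MHz", 32, 480_000_000),
    ("A10-OLinuXino-LIME, 16-bit DDR3 @480MHz", 16, 480_000_000),
]
for name, width, clock in boards:
    peak = peak_dram_bandwidth(width, clock)
    print(f"{name}: peak {peak / 1e6:.0f} MB/s, "
          f"scanout share {100 * scanout / peak:.1f}%")
```

On the LIME, the display refresh alone takes roughly a quarter of the theoretical peak, about twice the share it takes on the Cubieboard, which is in line with its larger performance drop at 1920x1080.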

The future KMS driver will probably need to have some special plane properties to resolve the problem for Allwinner A10. The newer Allwinner A20 SoC is not affected.

The usual and completely unsurprising thing is that both NEON optimized 2D software rendering and 3D acceleration like fast memory very much. Having fast memory is pretty much critical for running a Linux desktop system on ARM hardware.

References

- [1] Everything You Always Wanted to Know About SDRAM (Memory): But Were Afraid to Ask (anandtech.com)

- [2] DDR2 - An Overview (lostcircuits.com)